What is caching?

In a nutshell

Caching is the technique of storing frequently used data in an intermediate data store so that, after the initial retrieval from the original data source, subsequent retrievals are served from the intermediate store instead of the original source.

Example 1: Caching database data in Redis

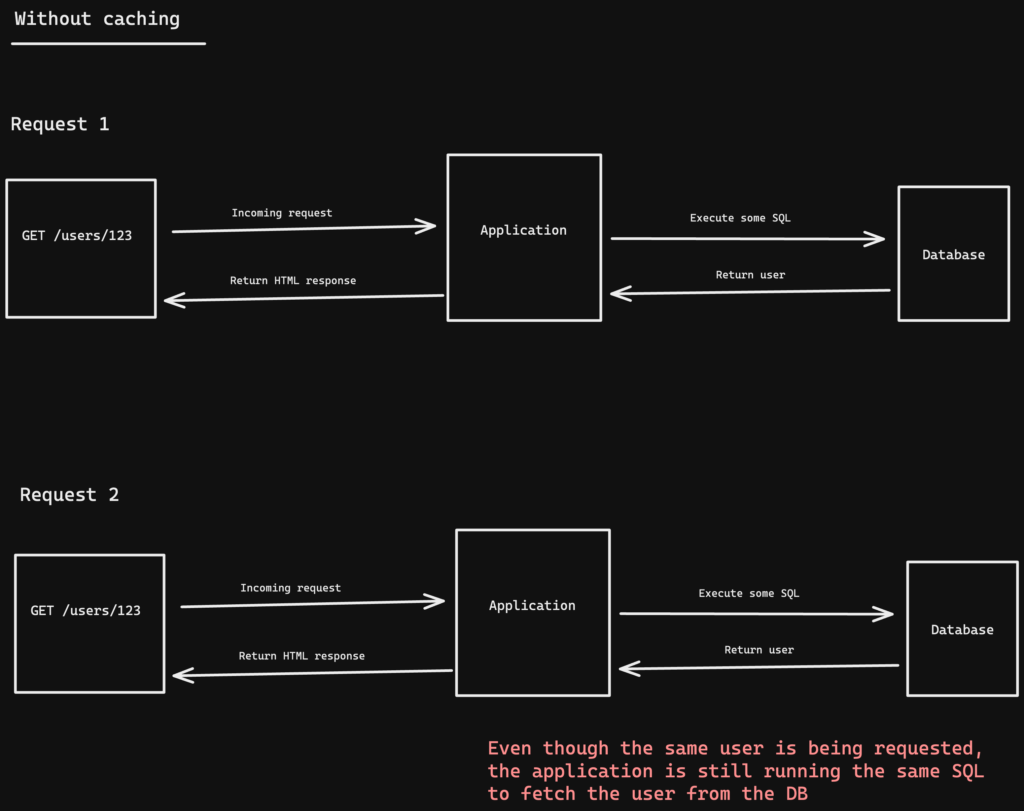

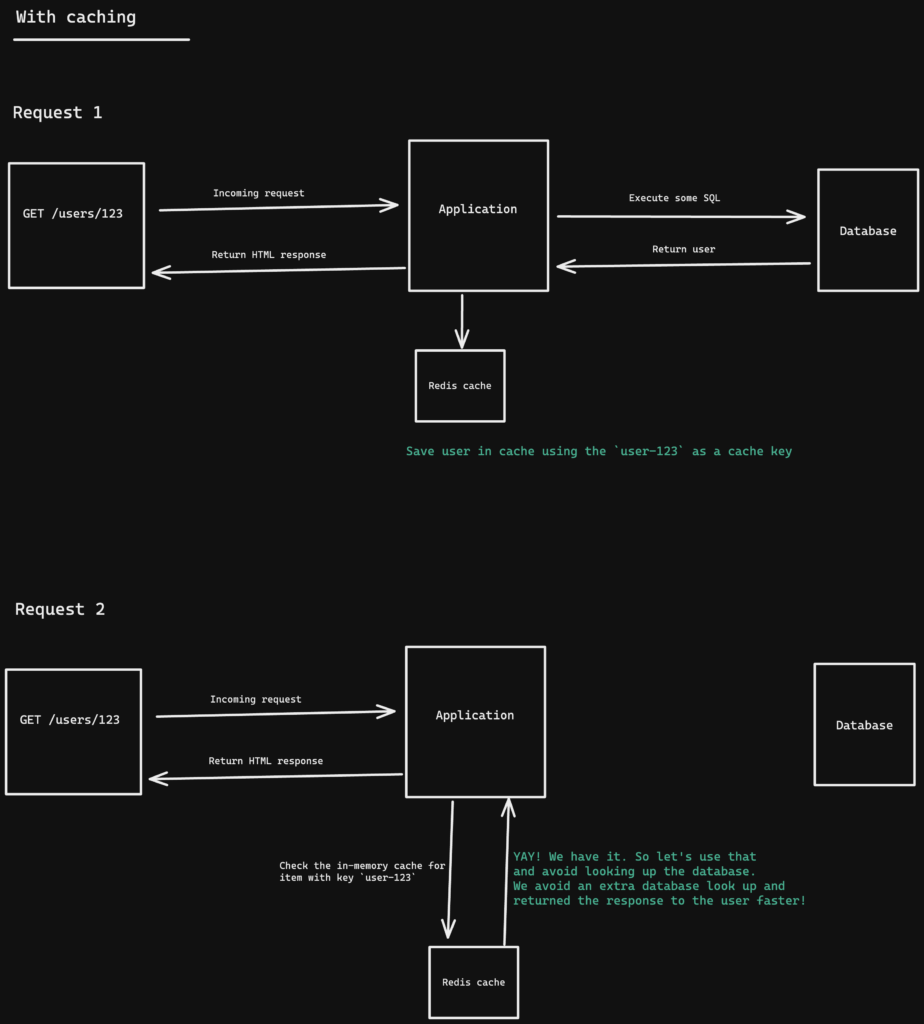

You might have a web application that needs to retrieve some data (e.g., a user record from a MySQL table) when a user visits https://acme.com/users/1. The first time there’s an HTTP request for https://acme.com/users/1, you fetch the user record from MySQL and then store it in Redis under a unique key like users-1.

On subsequent requests to https://acme.com/users/1, your application checks Redis for an entry with the key users-1. It finds one, so it returns that entry straight from Redis. There’s no need to query the (slower) MySQL database.

Retrieving data from an in-memory store such as Redis is typically much faster than querying a relational database or most other sources.
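
The flow in Example 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production setup: a plain dict stands in for Redis, and `fetch_user_from_db` is a hypothetical function that simulates the MySQL query with made-up data and an artificial delay. The names `get_user` and `db_queries` are likewise invented for this sketch.

```python
import time

# Assumption: a dict stands in for Redis so the sketch runs without a server.
cache = {}

# Counts how many times the (simulated) database is queried.
db_queries = 0


def fetch_user_from_db(user_id):
    """Hypothetical stand-in for a MySQL lookup; the record is made up."""
    global db_queries
    db_queries += 1
    time.sleep(0.05)  # pretend the database is slow
    return {"id": user_id, "name": f"user-{user_id}"}


def get_user(user_id):
    """Return a user record, checking the cache before the database."""
    key = f"users-{user_id}"  # unique cache key, e.g. users-1

    if key in cache:
        # Cache hit: skip the database entirely.
        return cache[key]

    # Cache miss: query the database, then store the result for next time.
    user = fetch_user_from_db(user_id)
    cache[key] = user
    return user


first = get_user(1)   # miss: goes to the simulated database
second = get_user(1)  # hit: served from the cache
```

After both calls, `db_queries` is 1, because only the first request reached the simulated database. With a real Redis server you would swap the dict for a client (for example, the `get` and `set` methods of redis-py) and serialize the record before storing it.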

Example 2: Caching HTTP responses in a CDN

A request comes in for https://example.com/cat.png. The CDN has no cached copy yet, so the request goes to your origin server (e.g., a PHP & Nginx instance). Your CDN (e.g., Cloudflare) intercepts the response from the server and stores the image in its cache using a unique identifier like example.com-cat.png. The CDN then forwards the server response so that the user sees the cat image.

On a subsequent request to https://example.com/cat.png, the CDN checks whether it has a cache entry for that URL. It does, so it returns the cached response. The request doesn’t need to go to the origin server again. CDNs often have multiple data centers around the world, so they’re usually closer to clients than origin servers are. This means responses are served more quickly.
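
The same idea can be sketched for Example 2. Again, this is a simplified model rather than how any particular CDN works: `origin_server`, `cdn_handle`, and `cache_key` are hypothetical names, the "image" is fake bytes, and a dict stands in for the CDN's cache. Real CDNs also consider response headers, expiry times, and more when deciding what to cache.

```python
from urllib.parse import urlsplit

# Counts how many requests actually reach the (simulated) origin server.
origin_hits = 0

# Assumption: a dict stands in for the CDN's cache storage.
cdn_cache = {}


def origin_server(url):
    """Hypothetical origin server; returns fake image bytes."""
    global origin_hits
    origin_hits += 1
    return b"fake-png-bytes-for-" + url.encode()


def cache_key(url):
    """Build an identifier like example.com-cat.png from the URL."""
    parts = urlsplit(url)
    return f"{parts.netloc}-{parts.path.lstrip('/')}"


def cdn_handle(url):
    """Serve from the CDN cache if possible, otherwise ask the origin."""
    key = cache_key(url)

    if key in cdn_cache:
        # Cache hit: the origin server is never contacted.
        return cdn_cache[key], "HIT"

    # Cache miss: fetch from the origin, store a copy, forward the response.
    body = origin_server(url)
    cdn_cache[key] = body
    return body, "MISS"


body1, status1 = cdn_handle("https://example.com/cat.png")  # first request
body2, status2 = cdn_handle("https://example.com/cat.png")  # repeat request
```

The first call is a miss that reaches the origin; the second is a hit that returns the stored copy, so `origin_hits` stays at 1.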

An application without caching

An application with caching
